Elementary Introduction

Finite state machines appear in a variety of instantiations: mechanical, electronic, fluidic. The physical mechanisms involved necessitate that the design is described by differential equations, but ultimately the manipulation of abstracted “logical” states is the final goal. Thus we can describe the architecture of a general finite state machine with ![]() algebra (or other finite rings too).

algebra (or other finite rings too).

Gates and Polynomials

Typically you will see a logic gate defined by its values on all combinations of inputs as a “truth table”:

And statements written with logical connectives:

![]()

Along with distributive laws:

![]()

De Morgan’s laws:

All of which apply to more complicated sentences rather than just individual variables. These laws along with commutative and associative laws are sufficient to evaluate and simplify any general logical expression, however we contend that this is the wrong language for computing and makes other important aspects – the dynamics and algebra – obscure.

There is one thing we can extract from logical connectives before moving on. The disjunctive normal form allows us to read truth tables and directly translate them into connective formulae which we can use later. Let us look at a different example which will help us escape the artificiality of AND and OR.

XOR is only “true” or 1 when x or y but not both, are 1. Disjunctive normal form says that we can view the x, y entries as unary operators which return the input with no change, combine these as given on the lines which evaluate to 1, and take the OR of all of them for the total connective form of the truth table.

Here is the second line: ![]()

The total is:

This process can be viewed as a sum of “elementary functions” which are only 1 on one line each, and building a general function/table. We utilize the fact that AND(x,y) is only 1 for a single combination of x and y, and that OR can be used to superimpose these outputs. For the rest of a brief introduction to logic, see the beginning of these lectures by Schuller.

In comparison, we have the addition and multiplication tables for ![]()

These rules are derived from addition and multiplication of integers with those given remainders after division by 2, reviewed in Lang’s Basic Mathematics ch 1, later formalized in algebra via quotients of groups and rings. At this point we should also note the important basic fact about binary/boolean valued functions on sets, that they classify subsets; The subset a binary function indicates is given as the preimage of 1. A combinatorial computation shows that a set X with ![]() elements has

elements has ![]() subsets. We achieve a great simplification using polynomials with binary coefficients:

subsets. We achieve a great simplification using polynomials with binary coefficients:

NOT(x) can formally be regarded as a two-variable polynomial which is constant in y, for simplicity here. The set of all such polynomials is standardly notated ![]() . Note that when evaluating the polynomial

. Note that when evaluating the polynomial ![]() always evaluates identically to

always evaluates identically to ![]() , equivalently

, equivalently ![]() . Thus we reduce this polynomial set as a ring quotient to

. Thus we reduce this polynomial set as a ring quotient to ![]() , meaning that any term of the form

, meaning that any term of the form ![]() evaluates to 0 or

evaluates to 0 or ![]() to

to ![]() . These sets of polynomials which evaluate to 0 are referred to as “boolean ideals” and will be important for understanding how to generalize to

. These sets of polynomials which evaluate to 0 are referred to as “boolean ideals” and will be important for understanding how to generalize to ![]() -valued gates. This gives a four-way equivalence between binary valued functions on n binary inputs:

-valued gates. This gives a four-way equivalence between binary valued functions on n binary inputs: ![]() (exponentiation of a set denotes an n-fold cartesian product), subsets of that set, propositions constructed out of connectives, and the polynomials in the quotient ring

(exponentiation of a set denotes an n-fold cartesian product), subsets of that set, propositions constructed out of connectives, and the polynomials in the quotient ring ![]() .

.

Let’s check this computation in ![]() , by regarding it as a vector space over the binary field

, by regarding it as a vector space over the binary field ![]() . The number of its basis monomials in each degree are:

. The number of its basis monomials in each degree are:

With the sum of cardinalities being binomial coefficients:![]()

Considering their linear combinations, the cardinality of ![]() must be

must be ![]() , which is the number of subsets of

, which is the number of subsets of ![]() and thus the number of binary valued logic gates on n-variables. Next we consider logic gates with multiple outputs. A truth table with multiple output columns can be seen as a tuple of polynomials, so we just take

and thus the number of binary valued logic gates on n-variables. Next we consider logic gates with multiple outputs. A truth table with multiple output columns can be seen as a tuple of polynomials, so we just take ![]() as this general set of n-input k-output gates. To see how composition works, let’s take in an example

as this general set of n-input k-output gates. To see how composition works, let’s take in an example ![]() . Since these gates are multivalued, they are really tuples of polynomials:

. Since these gates are multivalued, they are really tuples of polynomials:

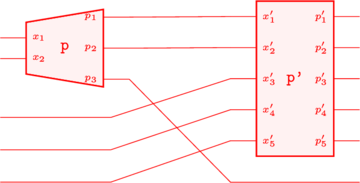

To “connect” the first two outputs of p to the first two inputs of p’, we can apply substitution:

Again, the last polynomial can be taken to be in ![]() but constant in

but constant in ![]() . It is considered an output since it is left uncomposed. A simpler visual mnemonic of this composition:

. It is considered an output since it is left uncomposed. A simpler visual mnemonic of this composition:

The result being an element of ![]() . Already this is resembling some sophisticated algebra: operads, tensor composition, multicategories, quantum circuits. It is more accurate to say operads are based on this kind of polynomial composition, a later article will detail how we see this composition in quantum computing via tensors. If rings describe the algebraic structure coming from addition and multiplication of gates via polynomials, multicategories/circuit notation might describe the complicated possibilities for composition. But we will stay more concrete for the purpose of motivating these further things better, and explaining practical uses of these ideas.

. Already this is resembling some sophisticated algebra: operads, tensor composition, multicategories, quantum circuits. It is more accurate to say operads are based on this kind of polynomial composition, a later article will detail how we see this composition in quantum computing via tensors. If rings describe the algebraic structure coming from addition and multiplication of gates via polynomials, multicategories/circuit notation might describe the complicated possibilities for composition. But we will stay more concrete for the purpose of motivating these further things better, and explaining practical uses of these ideas.

Finally, see that ![]() is universal in that every gate can be constructed from compositions with itself. First in

is universal in that every gate can be constructed from compositions with itself. First in ![]() :

:

The reader can also verify that the earlier gate, XOR, corresponds to summing variables and construct it via its disjunctive normal form. Since NAND was able to build NOT, AND, then OR, it is called universal as it then can build any two variable gate. Some other two variable gates are universal, but NAND is the easiest to build. There are then two ways to build any n-input 1-output gate as follows:

Consider a more general disjunctive normal form in n-variables, where an iterated AND is taken over each whole row and OR between them as before.

Or utilizing a basic result from polynomial theory with coefficients in a ring ![]() :

: ![]() . This notation makes sense because sets of polynomials with coefficients in a ring are themselves rings that one can take coefficients over. Building the polynomials in one-variable more is then done by multiplying the new monomial by old polynomials via their AND, and adding these more general terms with XOR. Some important gates will appear in the appendix.

. This notation makes sense because sets of polynomials with coefficients in a ring are themselves rings that one can take coefficients over. Building the polynomials in one-variable more is then done by multiplying the new monomial by old polynomials via their AND, and adding these more general terms with XOR. Some important gates will appear in the appendix.

Registers and Difference Equations

An important element of computers is sequential operation. This requires a notion of time which both supplies persistent memory, and the ability to reuse gates with new inputs at different times. The corresponding elementary time-dependent unit is called a data flip-flop or DFF, and it is structured like a logic gate with one input and one output:

![]()

It operates on binary-valued functions of integer time, rather than binary variables as gates do. See that it outputs a shift of the input, we can use DFFs to introduce controlled delay into our programs and hardware. Henceforth, combinations of DFFs and gates will be called circuits. One particularly simple circuit is the 1-bit register:

![Rendered by QuickLaTeX.com \begin{align*}&BIT(in(t),load(t))=out(t) \\\\=&DFF(MUX(in(t),out(t),load(t)) \\\\=&in(t-1)l(t-1)\\+&out(t-1)[l(t-1)+1]\end{align*}](https://sheafification.com/wp-content/ql-cache/quicklatex.com-c6f77e57b65743b50a7dd5b3a7eb592d_l3.png)

MUX is a gate with three inputs, which uses the load to determine whether it outputs one or the other of the two, at that instant in time.

The register is our first example of memory, as when ![]() , the output at

, the output at ![]() is the same as for

is the same as for ![]() . These functions of time can also be viewed as polynomials in infinitely many variables.

. These functions of time can also be viewed as polynomials in infinitely many variables.

Architectures and Programs

The idea of storing a program can be credited to a Mathematician, John Von Neumann. While modern architectures and operating systems make many compromises to appeal to different uses, Von Neumann’s architecture is the simplest case we want to study in order to go from mathematics to explicit programs.

The claim that Von Neumann should be credited with the stored program concept is questionable. People had worked on that sort of thing prior to VN. For example: https://en.m.wikipedia.org/wiki/J._Presper_Eckert

Hi ‘No,’ thank you for the feedback. I have heard this before, but there is no great confirmation of this fact. Every main resource for computing or its history (even MIT courses and webpages) all confirm that it was Von Neumann. If it is true, it is still unlikely it will ever be completely confirmed, and it is besides the point. I am working towards examining some simple hardware and algorithms following the physics-based history of computing such as the early implementations of Monte-Carlo for the Manhattan project and numerical differential equations applications.